Publications

A collaboration with the FDA: new insights using real-world evidence

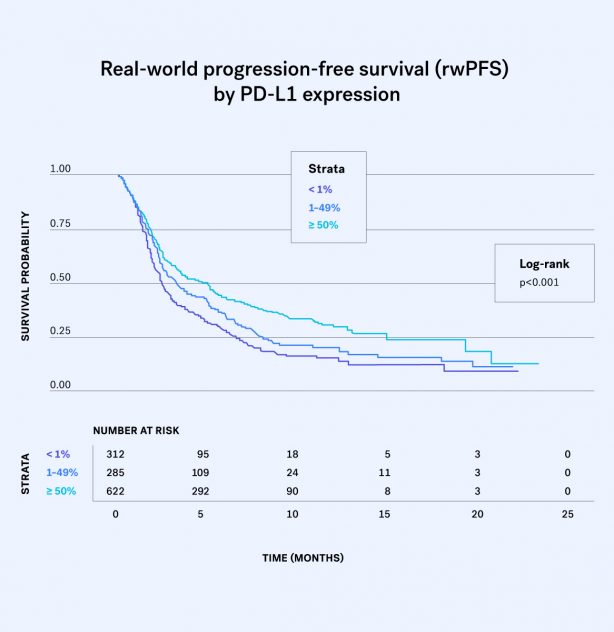

This latest study applies our method of assessing disease progression in real-world cancer datasets to a large cohort of patients with advanced NSCLC treated with PD-1/PD-L1 inhibitors...

Innovation Meets Empowerment: The Future of Data Science

| Miscellaneous

Defining Empowerment in Life Sciences Data Science

Empowerment in data science extends beyond simply providing access to tools and technologies. It involves creating an environment where researchers, clinicians, and decision-makers can effectively leverage data assets to drive meaningful outcomes. Within life sciences organizations, this empowerment takes several distinct forms:

- Democratization of data access

- Self-service analytics capabilities

- Cross-functional data literacy

Innovation Pathways in Biomedical Data Science

Innovation in data science in life sciences materializes through several interconnected pathways that collectively transform how organizations derive value from their data assets:

- Advanced analytics and AI integration

- Real-world data utilization

- Integrated knowledge management systems

Strategic Data Governance

Empowerment begins with a solid foundation of data governance. The following strategies illustrate how life sciences organizations can manage data responsibly while ensuring meaningful access and utility.

- Balancing accessibility and compliance

- Data quality management

- Metadata management and data cataloging

Innovation through Advanced Analytical Approaches

Innovation in data science is driven by advanced analytical methods that uncover new insights and opportunities. Below are some of the key analytical innovations transforming life sciences research and development.

- Computational biology and bioinformatics services

- Graph-based analytics for relationship discovery

- Natural language processing for scientific literature

Transforming Research through Data-Driven Decision-Making

At the core of innovation and empowerment lies data-driven decision-making, reshaping the way life sciences organizations conduct research and develop therapies. The examples below highlight how integrated data insights lead to more informed, efficient, and effective scientific outcomes.

- Target identification and validation

- Clinical trial optimization

- Precision medicine and biomarker development

Organizational Enablers of Empowerment & Innovation

For empowerment and innovation to take root, organizations must cultivate supportive environments. The following enablers represent critical elements that foster a culture of data-driven success across life sciences enterprises.

- Collaborative infrastructure

- Talent development and retention

- Executive leadership and cultural alignment

How Large Language Models Are Reshaping Bioinformatics

- Understanding LLMs in the context of biological data

- Protein structure prediction and analysis

- Genomic analysis and interpretation

- Drug discovery and development

- Medical literature analysis and knowledge integration

- Challenges and considerations

- The future direction of LLMs in bioinformatics

Understanding LLMs in the Context of Biological Data

Large language models are deep learning systems trained on vast text corpora to recognize patterns, generate coherent text, and perform various language-related tasks. When applied to bioinformatics, these models are adapted to work with biological sequences and structures, treating them as a specialized language with its own grammar and syntax. Biological data presents unique challenges compared to human language:

- Diverse data modalities - From genomic sequences to protein structures, metabolomic profiles, and electronic health records

- Complex relationships - Intricate interactions between molecules, cells, tissues, and organisms

- Interpretability requirements - Biological data needs to be harmonized and structured so that LLMs can ingest it.

- Integration needs - Different biological data types must be combined for comprehensive analysis.
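To make the "sequence as language" idea concrete, here is a minimal sketch of one common way to turn a nucleotide sequence into word-like tokens: overlapping k-mers mapped to integer ids. The function names, the choice of k = 3, and the toy reads are assumptions made for illustration; real biological language models may use different tokenization schemes.

```python
from collections import Counter


def kmer_tokens(sequence: str, k: int = 3, stride: int = 1) -> list[str]:
    """Split a nucleotide sequence into overlapping k-mer 'words'."""
    seq = sequence.upper()
    return [seq[i:i + k] for i in range(0, len(seq) - k + 1, stride)]


def build_vocab(token_lists: list[list[str]]) -> dict[str, int]:
    """Map each distinct k-mer to an integer id, most frequent first."""
    counts = Counter(tok for tokens in token_lists for tok in tokens)
    # Sort by descending count, then alphabetically, so ids are deterministic.
    ordered = sorted(counts, key=lambda t: (-counts[t], t))
    return {tok: idx for idx, tok in enumerate(ordered)}


# Toy reads, invented for this example.
reads = ["ATGCGATG", "GATGCA"]
tokenized = [kmer_tokens(r, k=3) for r in reads]
vocab = build_vocab(tokenized)
# Integer-encoded sequences are what a model would actually consume.
encoded = [[vocab[t] for t in tokens] for tokens in tokenized]
```

The integer ids play the same role as word ids in a text model: once sequences are encoded this way, the same embedding and attention machinery can in principle be applied to them.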

Protein Structure Prediction and Analysis

One of the most significant and recognized applications of LLMs in bioinformatics to date has been in protein structure prediction. AlphaFold2, developed by DeepMind, revolutionized the field by achieving unprecedented accuracy in predicting protein structures from amino acid sequences. This breakthrough has been followed by other powerful models such as RoseTTAFold and ESMFold for structure prediction and ProteinMPNN for protein sequence design. LLMs are now being used for:

- De novo protein design - Creating novel protein structures with specific functions for therapeutic applications

- Protein-protein interaction prediction - Identifying potential binding partners and interaction surfaces

- Functional annotation - Predicting protein functions based on sequence and structural features

- Mutation effect prediction - Assessing how genetic variants might affect protein structure and function

Genomic Analysis and Interpretation

In genomics, LLMs are beginning to transform how we interpret DNA and RNA sequences:

- Variant effect prediction

- Gene expression analysis

- Epigenomic analysis

Drug Discovery and Development

The pharmaceutical industry has embraced AI-driven approaches to drug discovery, with LLMs playing an increasingly important role:

- Target identification

- Molecule generation and optimization

- Toxicity and efficacy prediction

- Repurposing existing drugs

Medical Literature Analysis and Knowledge Integration

The biomedical literature is expanding at an overwhelming pace, making it impossible for researchers to stay current with all relevant publications. LLMs excel at processing and synthesizing information from this vast corpus:

- Literature mining

- Hypothesis generation

- Clinical trial design

Challenges and Considerations

Despite their promise, LLMs in bioinformatics face several challenges:

- Data quality and biases

- Interpretability

- Validation requirements

- Computational resources

The Future Direction of LLMs in Bioinformatics

The field of LLMs in bioinformatics is evolving rapidly. Several emerging trends are worth watching:

- Multimodal models

- Specialized biological LLMs

- Federated learning approaches

- Human-AI collaboration

Transforming Life Sciences: The Revolutionary Impact of AI on Bioinformatics

The Convergence of AI and Bioinformatics

Bioinformatics, the application of computational techniques to analyze biological data, has long been essential for interpreting the vast amounts of information generated by modern biological research. However, the landscape is fundamentally changing with the advent of sophisticated AI techniques. Traditional bioinformatics relied heavily on rule-based algorithms and statistical methods, but AI approaches can identify complex patterns and relationships that might otherwise remain undetected and can make predictions from complex input data.

The synergy between AI and bioinformatics is particularly powerful because biological systems are inherently complex, with countless interconnected components functioning across multiple scales. AI excels at pattern recognition and predictive modeling in precisely such environments, making it an ideal companion to traditional bioinformatics approaches.

Machine Learning Applications in Genomic Analysis

- Genome sequencing and assembly

- Variant calling and interpretation

AI-Driven Drug Discovery and Development

- Target identification

- Virtual screening and compound design

- Predicting drug properties and side effects

Personalized Medicine and Clinical Applications

- Patient stratification

- Disease prediction and progression

Challenges and Future Directions

Despite the tremendous promise of AI in bioinformatics, significant challenges remain. Interpretability is a major concern, as many of the most powerful AI techniques function as “black boxes” whose decision-making processes are difficult to understand or explain. This lack of transparency can be problematic in clinical settings where decisions must be justifiable.

Data quality and integration also present ongoing challenges. Biological data is often noisy, incomplete, and heterogeneous, making it difficult to combine datasets from different sources or experimental platforms. Federated learning approaches that allow AI models to be trained across multiple institutions without sharing the underlying data show promise for addressing some of these issues.

Privacy concerns are particularly acute when dealing with genomic and health data. Techniques like differential privacy and homomorphic encryption are being explored as ways to maintain data privacy while enabling AI-driven analysis.

The future of AI in bioinformatics will likely see increased integration of multiple data modalities and biological scales, from molecular interactions to cellular behaviors to organismal phenotypes. Reinforcement learning approaches may enable more autonomous experimental design and execution, potentially accelerating the pace of discovery.

AI has fundamentally transformed bioinformatics services and will continue to drive innovation across the life sciences. For pharmaceutical companies, biotech firms, research institutions, and healthcare providers, leveraging these technologies effectively requires not only advanced technical capabilities but also a deep understanding of the biological questions being addressed. Organizations that successfully integrate AI into their bioinformatics workflows can expect to see significant improvements in research efficiency, drug development timelines, and, ultimately, patient outcomes. The potential benefits extend beyond any single institution to advance our collective understanding of biology and human health.

Are you ready to harness the power of AI-driven bioinformatics for your research or drug development programs? Rancho Biosciences combines cutting-edge artificial intelligence with deep biological expertise to solve your most complex data challenges. Our team of experienced scientific professionals and AI specialists can help you implement custom solutions that accelerate discovery and drive innovation. Contact Rancho Biosciences today to explore how our tailored bioinformatics services can transform your research and development initiatives.

Ensuring Data Integrity in Bioinformatics: The Role of Quality Assurance

Understanding Data Quality Assurance in Bioinformatics

Data quality assurance in bioinformatics encompasses the metrics, standards, and procedures used to validate biological data’s integrity throughout its lifecycle. It involves systematic monitoring and evaluation of data from acquisition through processing, analysis, and interpretation. Unlike quality control, which focuses on identifying defects in specific outputs, quality assurance is a proactive approach that aims to prevent errors by implementing standardized processes and validation metrics. In bioinformatics, data QA typically includes:

- Raw data quality metrics (e.g., sequencing quality scores, read depth)

- Processing validation parameters (e.g., alignment rates, coverage uniformity)

- Analysis verification metrics (e.g., statistical validity measures, model performance indicators)

- Metadata completeness and accuracy measures

- Provenance tracking information

The Critical Importance of Quality Assurance in Bioinformatics

- Ensuring research reproducibility

- Enabling regulatory compliance

- Reducing costs and accelerating discovery

  - Reduce the need for repeated analyses by catching quality issues up front

  - Minimize false discoveries that could lead to failed clinical trials

  - Accelerate the drug discovery pipeline through more reliable target identification

  - Increase confidence in research findings for stakeholders and investors

Key Components of Data Quality Assurance in Bioinformatics

- Raw data quality assessment

  - Base call quality scores (Phred scores)

  - Read length distributions

  - GC content analysis

  - Adapter content evaluation

  - Sequence duplication rates

- Processing validation metrics

  - Alignment rates and mapping quality

  - Coverage depth and uniformity

  - Variant quality scores

  - Batch effect assessments

  - Variance among replicates

  - Technical artifact identification

- Analysis verification data

  - Statistical significance measures (p-values, q-values)

  - Effect size estimates

  - Confidence intervals

  - Model performance metrics (for machine learning applications)

  - Cross-validation results

- Metadata and provenance tracking
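Two of the raw-data metrics listed above, Phred quality scores and GC content, are simple enough to compute directly. The sketch below assumes the common Phred+33 FASTQ encoding and uses a hypothetical mean-quality cutoff of 20; the function names and the cutoff are illustrative choices, and production pipelines typically rely on dedicated QC tools.

```python
def phred_scores(quality_string: str, offset: int = 33) -> list[int]:
    """Decode a FASTQ quality string (Phred+33 by default) into integer scores."""
    return [ord(ch) - offset for ch in quality_string]


def error_probability(q: int) -> float:
    """Convert a Phred score Q into a base-call error probability, P = 10^(-Q/10)."""
    return 10 ** (-q / 10)


def gc_content(sequence: str) -> float:
    """Fraction of bases that are G or C (0.0 for an empty sequence)."""
    seq = sequence.upper()
    if not seq:
        return 0.0
    return sum(base in "GC" for base in seq) / len(seq)


def read_passes(quality_string: str, min_mean_q: float = 20.0) -> bool:
    """Keep a read only if its mean Phred score reaches the (assumed) cutoff."""
    scores = phred_scores(quality_string)
    return bool(scores) and sum(scores) / len(scores) >= min_mean_q
```

A Phred score of 20 corresponds to a 1 in 100 chance that the base call is wrong, and a score of 30 to 1 in 1,000, which is why thresholds in that range are often used as starting points.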

Best Practices for Quality Assurance in Bioinformatics

- Standardization and automation

- Comprehensive documentation

  - Experimental protocols

  - Processing workflows with version information

  - Analysis parameters and statistical methods

  - Quality control decision points and criteria

- Validation with reference standards

- Independent verification

Challenges in Bioinformatics Quality Assurance

- Data volume and complexity

- Evolving technologies and methods

- Integration of multi-omics data

The Future of Quality Assurance in Bioinformatics

- AI-driven quality assessment

- Community-driven standards

Revolutionizing Life Sciences: The Power of Spatial Innovation in Bioinformatics

The Emergence of Spatial Omics Technologies

Traditional omics technologies have provided valuable insights into biological systems, but they often lack spatial context. Bulk RNA sequencing, for instance, measures gene expression across entire tissue samples, obscuring the heterogeneity that exists at the cellular level. Single-cell sequencing, while able to detect expression patterns on a per cell basis, loses spatial context during sample preparation. Spatial omics technologies address this limitation by preserving and capturing spatial information during data collection.

Spatial transcriptomics enables researchers to visualize and analyze gene expression patterns within their native tissue context. This technology combines high-resolution imaging with molecular profiling, allowing for the precise mapping of gene expression across tissue sections. According to a landmark study published in Science, spatial transcriptomics has revealed previously unrecognized cell populations and gene expression gradients in complex tissues such as the brain and tumors. Similarly, spatial proteomics technologies like multiplexed ion beam imaging (MIBI) and imaging mass cytometry (IMC) allow for the simultaneous visualization of dozens of proteins within tissue sections. These approaches have been instrumental in characterizing the tumor microenvironment and identifying novel therapeutic targets.

Computational Challenges & Solutions

The integration of spatial information into bioinformatics workflows presents significant computational challenges. Spatial omics datasets are typically large and complex, requiring specialized algorithms and computational resources for analysis. Moreover, the integration of spatial data with other omics datasets necessitates sophisticated computational approaches. Several innovative computational methods have been developed to address these challenges:

- Spatial statistics & pattern recognition

- Graph-based approaches

- Machine learning & deep learning

Applications in Biomedical Research & Clinical Practice

The integration of spatial context into bioinformatics has enabled numerous applications across various biomedical research fields and clinical practice areas:

- Cancer research and precision oncology

- Neuroscience and neurological disorders

- Developmental biology and organogenesis

Future Directions & Emerging Trends

The field of spatial bioinformatics continues to evolve rapidly, with several emerging trends shaping its future:

- Multi-modal integration

- Temporal dynamics

- AI-driven discovery

Master Data Engineering: Optimizing Life Sciences Data for Precision Insights

Data Acquisition & Integration

In pharmaceutical and biotech industries, data is sourced from diverse origins, including clinical trials, genomic sequencing, and electronic health records. The challenge lies in integrating disparate datasets into a cohesive framework. Key considerations:

- ETL (Extract, Transform, Load) pipelines - Effective ETL pipelines ensure raw data is extracted from various sources, transformed into a standardized format, and loaded into a central repository.

- APIs and data streaming - Organizations leverage APIs and real-time data streaming solutions to ingest dynamic data from wearables, laboratory systems, and public health databases.

- Interoperability standards - Adhering to standards such as HL7 (Health Level Seven) and FHIR (Fast Healthcare Interoperability Resources) facilitates seamless data exchange in healthcare systems.

Data Governance & Quality Management

Robust data governance frameworks are essential to maintaining high-quality, reliable datasets in life sciences research. Without proper oversight, inconsistencies, redundancies, and errors can compromise downstream research outcomes. Key considerations:

- Data provenance - Establishing lineage tracking ensures data is sourced, processed, and analyzed with transparency.

- Regulatory compliance - Compliance with GDPR, HIPAA, and FDA 21 CFR Part 11 ensures data security and patient confidentiality in pharmaceutical and clinical research.

- Data validation techniques - Implementing automated data quality checks, including outlier detection and schema validation, minimizes errors and enhances reproducibility.
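The automated checks mentioned above can be sketched in a few lines. The example below pairs a simple type-based schema check with an interquartile-range (IQR) outlier rule. The schema, field names, and the 1.5 × IQR multiplier are assumptions chosen for illustration, not a prescribed standard.

```python
import statistics

# Hypothetical schema: field name -> expected Python type.
SCHEMA = {"sample_id": str, "age": int, "expression": float}


def schema_errors(record: dict, schema: dict = SCHEMA) -> list[str]:
    """List every missing field or type mismatch found in one record."""
    errors = []
    for field, expected in schema.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            actual = type(record[field]).__name__
            errors.append(f"{field}: expected {expected.__name__}, got {actual}")
    return errors


def iqr_outliers(values: list[float], k: float = 1.5) -> list[float]:
    """Return values lying more than k * IQR outside the first/third quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]
```

Running both checks at ingestion time, before data reaches a central repository, keeps malformed records and implausible measurements from propagating into downstream analyses.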

Scalability & Performance Optimization

With the exponential growth of biomedical and pharmaceutical data, scalability becomes a critical factor. Large-scale genomic datasets and high-throughput screening results require efficient processing and storage solutions. Key considerations:

- Cloud computing - Cloud-based platforms like AWS, Google Cloud, and Azure enable scalable data storage and computing power for bioinformatics pipelines.

- Distributed processing - Frameworks such as Apache Spark and Hadoop facilitate parallel computing, accelerating data processing for genome sequencing and proteomics analysis.

- Database optimization - Choosing the right database architecture—whether SQL, NoSQL, or graph databases—improves query performance and data retrieval times.

Workflow Automation & Pipeline Orchestration

Automated workflows streamline the processing of complex datasets, ensuring reproducibility and efficiency in bioinformatics and pharmaceutical research. Key considerations:

- Pipeline orchestration tools - Platforms like Apache Airflow and Nextflow automate multistep data workflows, from raw data ingestion to machine learning model training.

- Reproducibility - Version-controlled environments using tools like Docker and Kubernetes ensure consistency across research teams and institutions.

- CI/CD for data pipelines - Continuous integration and deployment (CI/CD) practices minimize errors in bioinformatics pipelines, enabling real-time data updates.
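Orchestration tools generally model a workflow as a directed acyclic graph (DAG) of steps and run each step only after its dependencies have finished. The sketch below shows that core idea using Python's standard-library `graphlib`; it is not the Airflow or Nextflow API, and the step names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
PIPELINE = {
    "ingest_raw": set(),
    "quality_check": {"ingest_raw"},
    "align_reads": {"quality_check"},
    "call_variants": {"align_reads"},
    "train_model": {"call_variants"},
}


def execution_order(dag: dict[str, set[str]]) -> list[str]:
    """Return the steps in an order that respects every dependency."""
    return list(TopologicalSorter(dag).static_order())


def has_cycle(dag: dict[str, set[str]]) -> bool:
    """Report whether the dependency graph is invalid because it loops back on itself."""
    try:
        execution_order(dag)
    except CycleError:
        return True
    return False
```

Checking for cycles before anything runs is a cheap safeguard: a pipeline whose steps depend on each other in a loop can never complete, and it is better to reject it at definition time.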

Data Security & Privacy

Given the sensitive nature of patient records, clinical trial data, and proprietary research, implementing stringent data security measures is nonnegotiable. Key considerations:

- Encryption standards - Encrypting data at rest and in transit using AES-256 and TLS protocols protects against unauthorized access.

- Access controls - Role-based access control (RBAC) ensures only authorized personnel can access specific datasets.

- Anonymization techniques - De-identification of patient data using k-anonymity and differential privacy techniques balances data utility with privacy compliance.
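As a rough illustration of k-anonymity, the sketch below measures the smallest group of records that share the same quasi-identifier values and shows how generalizing exact ages into ranges can raise that number. The records, field names, and ten-year bucket size are invented for the example.

```python
from collections import Counter


def k_anonymity(records: list[dict], quasi_identifiers: list[str]) -> int:
    """Size of the smallest group sharing identical quasi-identifier values."""
    if not records:
        return 0
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


def generalize_age(record: dict, bucket: int = 10) -> dict:
    """Replace an exact age with a coarser range such as '30-39'."""
    low = (record["age"] // bucket) * bucket
    return {**record, "age": f"{low}-{low + bucket - 1}"}


# Invented records; 'age' and 'zip3' are treated as quasi-identifiers.
patients = [
    {"age": 34, "zip3": "921", "diagnosis": "NSCLC"},
    {"age": 37, "zip3": "921", "diagnosis": "melanoma"},
    {"age": 52, "zip3": "940", "diagnosis": "NSCLC"},
    {"age": 58, "zip3": "940", "diagnosis": "CRC"},
]

k_before = k_anonymity(patients, ["age", "zip3"])
k_after = k_anonymity([generalize_age(p) for p in patients], ["age", "zip3"])
```

Here every exact age is unique, so the raw table is only 1-anonymous; after bucketing, each record shares its quasi-identifiers with one other, giving k = 2. The trade-off is the usual one: coarser values protect privacy but reduce analytical detail.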

Knowledge Mining & Target Profiling

Data engineering principles support advanced analytics techniques such as knowledge mining and target profiling, which are crucial in drug discovery and precision medicine. Key considerations:

- AI-driven data curation - Machine learning algorithms extract meaningful insights from unstructured data sources, such as scientific literature and clinical notes.

- Entity resolution - Resolving ambiguous identifiers across datasets enhances data consistency in pharmacovigilance and biomarker research.

- Graph analytics - Graph databases facilitate the identification of complex biological relationships in omics data and drug-target interactions.

Sustainable Data Management & Cost Efficiency

Managing massive datasets in life sciences requires cost-effective solutions without compromising performance or security. Key considerations:

- Cold vs. hot storage - Tiered storage solutions differentiate frequently accessed data from archival datasets, reducing storage costs.

- Data lifecycle management - Establishing retention policies ensures obsolete data is archived or deleted according to compliance guidelines.

- Serverless architectures - Serverless computing optimizes resource allocation, reducing operational expenses while maintaining efficiency.

The Future of Data Engineering in Life Sciences

As the demand for data-driven insights grows in pharmaceuticals, biotech, and healthcare, the principles of data engineering will continue to evolve. Organizations that adopt scalable, secure, and automated data solutions will be well positioned to drive innovation in drug development, precision medicine, and clinical research.

Rancho Biosciences specializes in data curation, governance, bioinformatics services, and advanced analytics for pharmaceutical and biotech companies, research foundations, and healthcare institutions. Our expertise in building scalable data solutions empowers organizations to unlock the full potential of their data. Contact us today to learn how we can support your data engineering needs.

Raw Data vs. Curated Data in Life Sciences: Why the Distinction Matters and the Future of AI in Generating Reliable Data Insights

Raw Data: The Starting Point

Raw data refers to unprocessed, unstructured, and/or unfiltered information collected from experiments, clinical trials, sensors, or computational simulations. This data often includes noise, redundancies, and errors that require transformation before it becomes useful for analysis. Examples of raw data in life sciences include:

- Genomic sequences from next-generation sequencing (NGS) technologies

- Mass spectrometry outputs from proteomics studies

- Electronic health records (EHRs) containing physician notes and diagnostic information

- Sensor readings from wearable medical devices

Curated Data: Structured & Refined for Analysis

Curated data, in contrast, is refined, standardized, and structured information that has undergone validation, annotation, and integration. Curation ensures data consistency, accuracy, and interoperability, making it easier to analyze and apply to research and decision-making processes. Examples of curated data in life sciences include:

- Annotated genomic datasets with functional information linked to gene variants

- Standardized clinical trial results formatted according to regulatory guidelines

- Curated knowledge bases that integrate biomedical literature, experimental data, and computational models

Why the Distinction Matters in Life Sciences

The transformation of raw data into curated data has profound implications for various aspects of life sciences research and development:

- Ensuring data quality and integrity

- Facilitating regulatory compliance

- Enhancing bioinformatics analysis and predictive modeling

- Improving reproducibility and collaboration

- Reducing time and costs in research and development

- Accelerating drug discovery

- Enhancing clinical trial design and execution

- Advancing precision medicine

- Facilitating bioinformatics analysis

Challenges in Data Curation & Management

Despite its advantages, data curation poses several challenges:

- Scalability - The exponential growth of biomedical data requires scalable curation solutions that balance automation and expert oversight.

- Interoperability - Integrating diverse datasets from different sources (e.g., patient records, omics data, and imaging data) requires adherence to common standards.

- Data security and privacy - Handling sensitive patient data necessitates compliance with regulations like HIPAA and GDPR.

The Future of Data Curation in Life Sciences

As the volume and complexity of life sciences data continue to grow, the importance of effective data curation will only increase. Several trends are shaping the future of this field:

- AI-assisted curation - Machine learning, natural language processing, and large language model (LLM) technologies are being developed to automate aspects of the curation process, improving efficiency and scalability.

- Collaborative curation - Open-source platforms and community-driven efforts are emerging to facilitate collaborative curation across institutions and disciplines.

- Real-time curation - Advanced technologies are enabling more rapid curation of data, moving toward real-time processing and integration of new information.

- Semantic integration - The development of ontologies and knowledge graphs is enhancing the ability to integrate diverse datasets at a semantic level, improving the contextual understanding of curated data.

Revolutionizing Diagnostics: Cutting-Edge Techniques in Digital Pathology

The Foundation: Whole Slide Imaging (WSI)

At the heart of digital pathology lies Whole Slide Imaging (WSI), a groundbreaking technique that digitizes entire glass slides containing tissue samples. WSI utilizes advanced scanners to capture high-resolution digital reproductions of slides, preserving intricate cellular structures and subtle nuances with remarkable fidelity. Key features of WSI:

- Accurate digital replication of physical slides

- Multi-magnification viewing capabilities

- Enhanced accessibility and sharing of specimen data

AI-Assisted Analysis: Enhancing Diagnostic Capabilities

The integration of artificial intelligence (AI) with digital pathology has ushered in a new era of diagnostic precision and efficiency. AI algorithms can analyze vast datasets, identify complex patterns, and help pathologists work more efficiently and make more accurate diagnoses. AI applications in digital pathology:

- Image recognition for cellular anomalies

- Predictive modeling for disease progression

- Automated quantification of biomarkers

Image Analysis Software

Sophisticated image analysis software is a crucial component of digital pathology systems. These tools offer a wide range of functionalities that enhance the pathologist’s ability to interpret and analyze digital slides. Key features of image analysis software:

- Automated cell counting and classification

- Tissue segmentation and region of interest (ROI) identification

- Quantitative analysis of biomarker expression

Cloud-Based Collaboration and Storage

The shift toward digital pathology has facilitated unprecedented levels of collaboration among pathologists and researchers. Cloud-based platforms enable secure sharing of digital slides and associated data across institutions and geographical boundaries. Benefits of cloud-based systems:

- Real-time collaboration on challenging cases

- Centralized storage of large volumes of pathology data

- Enhanced accessibility for remote consultations

Integration with Laboratory Information Systems (LIS)

Seamless integration between digital pathology systems and laboratory information systems (LIS) is crucial for streamlining workflows and ensuring data integrity. This integration allows for efficient management of patient information, specimen tracking, and results reporting. Advantages of LIS integration:

- Reduced manual data entry and associated errors

- Improved traceability of specimens throughout the diagnostic process

- Enhanced efficiency in reporting and communication of results

3D Pathology: The Next Frontier

While still in its early stages, three-dimensional (3D) pathology represents an exciting frontier in digital pathology techniques. By creating 3D reconstructions of tissue samples, pathologists can gain new insights into tissue architecture and disease progression. Potential applications of 3D pathology:

- Enhanced visualization of tumor microenvironments

- Improved understanding of complex anatomical relationships

- More accurate staging of cancers

Challenges and Considerations

While digital pathology offers numerous advantages, its implementation isn’t without challenges. Healthcare institutions and research organizations must carefully consider several factors when adopting these technologies. Key considerations:

- Data security and patient privacy protection

- Regulatory compliance and validation of digital systems

- Training and adaptation of pathologists to digital workflows

The Future of Digital Pathology

The field of digital pathology is rapidly evolving, with new techniques and applications emerging regularly. As AI and machine learning technologies continue to advance, we can expect even more sophisticated analysis capabilities and decision support tools for pathologists. Emerging trends:

- Integration of genomic data with digital pathology images

- Development of AI-powered predictive models for treatment response

- Expansion of digital pathology into new areas of medical research

Unveiling Hidden Insights: The Power of Data Mining

The Foundations of Knowledge Discovery in Databases

Data mining is a crucial step in the broader process of Knowledge Discovery in Databases (KDD). This multistep process involves extracting useful information from large volumes of data and transforming it into comprehensible structures for further use. The KDD process typically includes data selection, preprocessing, transformation, data mining, and interpretation/evaluation of the results.

Types of Insights Produced by Data Mining

Association Rules

Association rule learning is a powerful data mining technique that searches for relationships between variables in large datasets. In the pharmaceutical industry, this method can be applied to:

- Identify drug-drug interactions and potential side effects

- Discover correlations between genetic markers and drug responses

- Analyze patient purchasing habits for targeted marketing strategies
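Association rules are usually scored with three quantities: support (how often an itemset occurs), confidence (how often the consequent appears when the antecedent does), and lift (confidence relative to the consequent's baseline frequency). The sketch below computes all three on invented reports; the item labels such as `drug_a` are placeholders, and a real analysis would add a frequent-itemset search and statistical safeguards.

```python
def support(transactions: list[set], itemset: set) -> float:
    """Fraction of transactions that contain every item in itemset."""
    if not transactions:
        return 0.0
    return sum(itemset <= t for t in transactions) / len(transactions)


def confidence(transactions: list[set], antecedent: set, consequent: set) -> float:
    """Estimate P(consequent | antecedent) from the transactions."""
    base = support(transactions, antecedent)
    return support(transactions, antecedent | consequent) / base if base else 0.0


def lift(transactions: list[set], antecedent: set, consequent: set) -> float:
    """Confidence divided by the consequent's overall support; > 1 suggests association."""
    expected = support(transactions, consequent)
    if not expected:
        return 0.0
    return confidence(transactions, antecedent, consequent) / expected


# Invented reports: each set lists the drugs taken and events observed.
reports = [
    {"drug_a", "drug_b", "bleeding"},
    {"drug_a", "drug_b", "bleeding"},
    {"drug_a", "nausea"},
    {"drug_b"},
    {"drug_a", "drug_b"},
]

rule_confidence = confidence(reports, {"drug_a", "drug_b"}, {"bleeding"})
rule_lift = lift(reports, {"drug_a", "drug_b"}, {"bleeding"})
```

In this toy data the rule "drug_a and drug_b together imply bleeding" has a lift above 1, meaning the event shows up more often with the combination than it does overall. That is a signal worth investigating, not evidence of causation.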

Classification Models

Classification is the task of generalizing known structures to apply to new data. In the context of pharmaceutical and biotech research, classification models can be used to:

- Predict drug efficacy based on molecular structures

- Categorize patients into risk groups for personalized treatment plans

- Identify potential drug candidates from large compound libraries

Clustering Patterns

Clustering techniques aim to discover groups and structures in data that are similar in some way. In pharmaceutical research, clustering can be applied to:

- Group patients with similar genetic profiles for targeted therapies

- Identify subpopulations that respond differently to treatments

- Cluster chemical compounds with similar properties for lead optimization

Predictive Models

Regression analysis and other predictive modeling techniques allow researchers to estimate relationships among data or datasets. In the pharmaceutical industry, these models can be used to:

- Predict drug absorption, distribution, metabolism, and excretion (ADME) properties

- Forecast clinical trial outcomes based on patient characteristics

- Estimate market demand for new drugs

Anomaly Detection

Identifying unusual data records or patterns that deviate from the norm is crucial in pharmaceutical research and development. Anomaly detection can be applied to:

- Detect adverse drug reactions in post-marketing surveillance

- Identify potential fraud or errors in clinical trial data

- Monitor manufacturing processes for quality control
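One simple, robust way to flag readings that deviate from the norm is the modified z-score, which uses the median and the median absolute deviation (MAD) so that the outliers themselves do not distort the baseline. The sketch below is one possible approach rather than the method any particular system uses; the 3.5 threshold is a commonly cited rule of thumb, and the readings in the usage note are invented.

```python
import statistics


def mad_anomalies(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices of values whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        return []  # No spread to measure deviations against.
    # 0.6745 rescales the MAD so scores are comparable to standard z-scores.
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - median) / mad) > threshold
    ]
```

For example, `mad_anomalies([10.1, 9.9, 10.0, 10.2, 9.8, 14.0])` flags only the last reading, since the other five sit within a fraction of a unit of the median.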

Applications of Data Mining Knowledge in Pharmaceutical and Biotech Industries

Drug Discovery and Design

Data mining techniques play a crucial role in accelerating the drug discovery process. By analyzing vast chemical libraries and biological databases, researchers can:

- Identify potential drug targets

- Predict drug-target interactions

- Optimize lead compounds for desired properties

Clinical Trial Optimization

The knowledge produced through data mining can greatly enhance the efficiency and success rate of clinical trials. By analyzing historical trial data and patient information, researchers can:

- Optimize patient recruitment strategies

- Predict trial outcomes and identify potential risks

- Design more efficient and targeted trial protocols

Personalized Medicine

Data mining techniques are instrumental in advancing the field of personalized medicine. By analyzing large-scale genomic and clinical data, researchers can:

- Identify genetic markers associated with drug response

- Develop targeted therapies for specific patient subgroups

- Predict individual patient outcomes and treatment efficacy

Pharmacovigilance and Drug Safety

Post-marketing surveillance of drugs is critical for ensuring patient safety. Data mining techniques applied to large-scale adverse event databases can:

- Detect previously unknown drug side effects

- Identify drug-drug interactions

- Monitor the long-term safety profile of medications

Manufacturing and Quality Control

Data mining can also improve pharmaceutical manufacturing processes and quality control. By analyzing production data, companies can:

- Optimize manufacturing parameters for consistent product quality

- Predict and prevent equipment failures

- Identify factors affecting product stability and shelf life

The Role of Bioinformatics Services

The integration of bioinformatics services with data mining techniques has become increasingly important in pharmaceutical and biotech research. These services provide specialized tools and expertise for analyzing complex biological data, including genomic sequences, protein structures, and metabolic pathways. By combining bioinformatics with advanced data mining algorithms, researchers can gain deeper insights into biological systems and accelerate the drug discovery process.

To successfully apply these bioinformatic approaches, high-quality data can be identified from the public domain, which can be combined with internal data to answer specific questions. In many cases, this data must be harmonized to allow maximum utilization. The identification and harmonization of data can be tackled by skilled curation services.

Data mining has become an indispensable tool in the pharmaceutical and biotech industries, producing various types of knowledge that drive innovation and improve decision-making processes. From drug discovery and clinical trial optimization to personalized medicine and pharmacovigilance, the insights gained through data mining techniques are transforming the way we develop and deliver healthcare solutions for better patient outcomes.

Are you looking to harness the power of data mining and bioinformatics to accelerate your pharmaceutical or biotech research? Rancho Biosciences offers comprehensive data curation, knowledge mining, and bioinformatics analysis services tailored to your specific needs. Our team of experts can help you unlock the hidden potential in your data, driving innovation and improving decision-making across your organization. Contact us today to learn how we can support your research goals and help you stay at the forefront of scientific discovery.

CDISC Standards: A Crucial Requirement for FDA Submissions in Clinical Research

The FDA’s Stance on CDISC Standards

The FDA has been working closely with CDISC since its inception to develop and implement data standards that facilitate the regulatory review process. As a result, the FDA has required CDISC-conformant study data in covered submissions since December 2016. This requirement underscores the importance of CDISC standards in the pharmaceutical industry and their role in expediting the drug approval process.

Key CDISC Standards Required by the FDA

The FDA requires the use of CDISC standards in new drug applications (NDAs), biologics license applications (BLAs), and abbreviated new drug applications (ANDAs) for studies that started after December 17, 2016. The requirement took effect 24 months after the agency finalized its guidance on standardized study data on December 17, 2014. It is outlined in the FDA’s Study Data Standards Resources, which include:

- Study Data Technical Conformance Guide (TCG) - A resource that provides technical recommendations for preparing and submitting standardized study data to the FDA

- Data Standards Catalog - A resource that specifies which versions of data standards and terminologies are supported by the FDA for use in regulatory submissions

- Study Data Tabulation Model (SDTM) - A standard for clinical data in tabular form, designed to facilitate data aggregation and sharing

- Analysis Data Model (ADaM) - A standard for analysis-ready datasets derived from SDTM

- CDASH (Clinical Data Acquisition Standards Harmonization) - A standard for the capture of clinical data, optimized for ease of collection, data quality, and subsequent transformation into SDTM format

- SEND (Standard for Exchange of Nonclinical Data) - A standard for preclinical data, such as toxicology studies in animals
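To make the idea of transforming collected data into SDTM format more concrete, here is a minimal sketch that maps one raw demographics record to a row shaped like the SDTM Demographics (DM) domain. The input field names, the sex-code mapping, and the way the unique subject identifier is built are assumptions for illustration; an actual mapping follows the SDTM Implementation Guide and the sponsor's own conventions.

```python
# Hypothetical raw record as it might come out of a data-capture system.
raw = {"study": "ABC-101", "site": "012", "subject": "0007",
       "gender": "female", "age_years": 54}

# Assumed mapping from collected values to submission codes for SEX.
SEX_CODES = {"male": "M", "female": "F", "unknown": "U"}

def to_dm_record(rec):
    """Map one raw demographics record to an SDTM-style DM row (sketch only)."""
    sex = SEX_CODES.get(rec["gender"].strip().lower())
    if sex is None:
        raise ValueError(f"Unmapped sex value: {rec['gender']!r}")
    return {
        "STUDYID": rec["study"],
        "DOMAIN": "DM",
        # Concatenating study, site, and subject is a common convention,
        # assumed here; the identifier only has to be unique per subject.
        "USUBJID": f"{rec['study']}-{rec['site']}-{rec['subject']}",
        "SUBJID": rec["subject"],
        "SITEID": rec["site"],
        "AGE": rec["age_years"],
        "AGEU": "YEARS",
        "SEX": sex,
    }

dm_row = to_dm_record(raw)
```

Raising an error on unmapped values, rather than passing them through, is a deliberate choice in this sketch: it surfaces terminology gaps early instead of at submission time.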

Benefits of CDISC Standards in Clinical Research

The implementation of CDISC standards offers numerous advantages to the pharmaceutical and biotech industries:

- Increased efficiency

- Enhanced data quality

- Facilitated data sharing

Implementing CDISC Standards: Challenges and Solutions

Adopting CDISC standards can present challenges for organizations, particularly those with established data management processes:

- High initial costs

- Complexity of data transformation

- Keeping up with updates

Organizations can address these challenges through:

- Early planning

- Leveraging technology

- Continuous training

- Collaboration with experts

The Future of CDISC Standards and FDA Requirements

As the field of clinical research continues to evolve, especially with the potentially transformational role of AI in drug development, CDISC standards are likely to adapt and expand. The FDA has demonstrated an ongoing commitment to data standardization, as evidenced by its Data Standards Strategy for fiscal years 2023–2027. This strategy emphasizes the continued importance of CDISC standards in regulatory submissions and highlights areas for future development. Some potential areas of growth for CDISC standards include:

- Integration with real-world data sources

- Adaptation to emerging therapeutic areas, such as gene and cell therapies

- Enhanced support for patient-reported outcomes and wearable device data

Unlocking Data Potential: Understanding FAIR vs Open Data in Life Sciences

Understanding FAIR Data

FAIR is a set of guiding principles for scientific data management and stewardship. The principles aim to optimize data management so that both machines and humans can effectively find, access, and use data. The acronym stands for Findable, Accessible, Interoperable, and Reusable. These principles were developed to support the reusability of digital assets, addressing the increasing volume and complexity of data generated in modern research.

- Findable

  - Assigning unique and persistent identifiers to datasets and entities

  - Providing rich metadata that describes the data in detail

  - Registering or indexing the data in a searchable resource

- Accessible

  - The data is retrievable by its identifier using a standardized protocol

  - The protocol is open, free, and universally implementable

  - The metadata remains accessible even when the data is no longer available

- Interoperable

  - Using a shared and broadly applicable language for knowledge representation

  - Utilizing standard vocabularies that follow FAIR principles

  - Including qualified references to other data

- Reusable

  - Having clear and accessible data usage licenses

  - Providing detailed data provenance information

  - Meeting domain-relevant community standards
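The principles above can be partly checked by machine. The sketch below tests a dataset's metadata record for a handful of fields loosely aligned with each principle. The field names, the grouping, and the placeholder identifier are all hypothetical; a real assessment would follow a community metadata schema and a much fuller checklist.

```python
# Hypothetical field names; real projects would follow a community metadata schema.
REQUIRED = {
    "findable": ["identifier", "title", "description"],
    "accessible": ["access_protocol"],
    "interoperable": ["vocabulary"],
    "reusable": ["license", "provenance"],
}

def fair_gaps(metadata):
    """Return, per FAIR principle, the required fields that are missing or empty."""
    gaps = {}
    for principle, fields in REQUIRED.items():
        missing = [f for f in fields if not metadata.get(f)]
        if missing:
            gaps[principle] = missing
    return gaps

record = {
    "identifier": "doi:10.0000/example",  # placeholder, not a real DOI
    "title": "Example expression dataset",
    "description": "Bulk RNA-seq counts for a hypothetical cohort",
    "access_protocol": "https",
    "vocabulary": "",           # empty: no controlled vocabulary declared
    "license": "CC-BY-4.0",
    # "provenance" is absent entirely
}
gaps = fair_gaps(record)
```

A check like this only confirms that fields are present. Whether the identifier is persistent or the vocabulary is appropriate still requires curation.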

Benefits of FAIR Data in Life Sciences

Pharmaceutical and biotech companies rely heavily on FAIR data to:

- Streamline data sharing across international research teams

- Reduce duplication of effort by making datasets reusable

- Facilitate machine learning and artificial intelligence in drug discovery

- Ensure regulatory compliance by maintaining detailed data provenance

Open Data: A Different Approach

Open data, on the other hand, focuses on making data freely available to everyone to use and republish without restrictions. Open data is rooted in the ideas of transparency, collaboration, and unrestricted sharing to promote innovation and societal benefit. The key characteristics of open data include:

- Availability and access - The data must be freely accessible to all, preferably by downloading over the internet without paywalls or complex permissions, at no more than a reasonable reproduction cost.

- Reuse and redistribution - There are no legal or technical restrictions on how the data can be utilized. The data must be provided under terms that permit reuse and redistribution, including the intermixing with other datasets.

- Transparency - Open data emphasizes full transparency in its collection, processing, and sharing.

Benefits of Open Data in Life Sciences

In the life sciences sector, open data has been instrumental in:

- Accelerating research by providing unrestricted access to key datasets such as The Cancer Genome Atlas (TCGA)

- Enhancing reproducibility by making data available for validation studies

- Promoting public trust in science by ensuring transparency in research findings

Key Differences between FAIR and Open Data

While FAIR data and open data share some common goals, they differ in several important aspects:

- Accessibility requirements

- Focus on machine readability

- Metadata and documentation

- Interoperability standards

| Aspect | FAIR Data | Open Data |
|---|---|---|
| Accessibility | Can be open or restricted based on use case | Always open to all |
| Focus | Ensures data is machine-readable and reusable | Promotes unrestricted sharing and transparency |
| Licensing | Varies; can include access restrictions | Typically utilizes open licenses like Creative Commons |
| Primary Users | Designed for researchers, institutions, and machines | Designed for public and scientific communities |
| Application | Ideal for structured data integration in R&D | Ideal for democratizing access to large datasets |

Implications for Life Sciences Industries

The distinction between FAIR and open data has significant implications for pharmaceutical, biotech, and healthcare industries:

- Data sharing in collaborative research

- Clinical trial data management

- Genomics and bioinformatics

- Regulatory compliance

Integrating FAIR and Open Data Strategies

In many cases, life sciences organizations find value in combining FAIR and open data principles. For example:

- A biotech company might use FAIR principles to manage its proprietary datasets while contributing anonymized, aggregated data to open repositories for public benefit.

- Government-funded research institutions often follow FAIR principles internally and publish open data externally to comply with transparency mandates.

The Future of Data Management in Life Sciences

As the life sciences continue to generate increasingly complex and voluminous data, the principles of FAIR data are likely to become even more critical. While open data will continue to play an important role, particularly in publicly funded research, the nuanced approach of FAIR data is better suited to the complex needs of the pharmaceutical and biotech industries. By adopting FAIR data principles, companies can:

- Enhance the value of their data assets

- Improve collaboration and data sharing

- Accelerate the pace of discovery and innovation

- Ensure better compliance with regulatory requirements

- Increase the reproducibility of research findings

Role of Data Curation and Bioinformatics Services

Organizations operating in life sciences can overcome these challenges by partnering with specialized bioinformatics service providers like Rancho Biosciences, which offers:

- Data curation and governance - Ensuring datasets adhere to FAIR principles while meeting regulatory standards

- Custom workflows and pipelines - Designing interoperable systems for seamless data integration

- Knowledge mining and database building - Extracting actionable insights from both proprietary and open data

The Science of Safety: Understanding Toxicology in Biomedical Research

The Fundamentals of Toxicology

At its core, toxicology is the study of the adverse effects of chemicals or physical agents on living organisms. This discipline encompasses a wide range of research areas, including:

- Mechanistic toxicology

- Regulatory toxicology

- Environmental toxicology

- Clinical toxicology

Toxicology in Drug Development

The pharmaceutical industry relies heavily on toxicological studies to ensure the safety of new drugs before they reach clinical trials. This process involves several key stages:

- Preclinical safety assessment

  - Identify potential target organs for toxicity

  - Determine safe starting doses for human trials

  - Predict potential side effects

  - Establish safety margins between therapeutic and toxic doses

- Toxicokinetics and pharmacokinetics

  - Determine the relationship between drug dose and toxic effects

  - Identify potential drug-drug interactions

  - Optimize dosing regimens to minimize toxicity while maintaining efficacy

- Genotoxicity and carcinogenicity testing

  - In vitro mutagenicity assays (e.g., Ames test)

  - In vivo chromosomal aberration studies

  - Long-term carcinogenicity studies in rodents

Emerging Trends in Toxicology

The field of toxicology is continuously evolving, incorporating new technologies and approaches to enhance safety assessments. Some notable trends include:

- Toxicogenomics

  - Identify biomarkers of toxicity

  - Predict potential adverse effects based on gene expression patterns

  - Understand individual susceptibility to specific substances

- 3D cell culture models

  - Better prediction of human toxicity compared to traditional 2D cell cultures

  - Reduced reliance on animal testing

  - Improved understanding of organ-specific toxicity mechanisms

- Artificial intelligence and machine learning

  - Rapid screening of large chemical libraries for potential toxicity

  - Identification of complex patterns in toxicological data

  - Development of more accurate in silico toxicity prediction models

Regulatory Landscape and Toxicology

Toxicological studies are essential for meeting regulatory requirements and ensuring public safety. Key regulatory bodies and guidelines include:

- FDA (Food and Drug Administration) - Oversees drug safety in the United States

- EMA (European Medicines Agency) - Regulates medicines in the European Union

- ICH (International Council for Harmonisation) - Provides global guidelines for drug development and safety assessment

- Good Laboratory Practice (GLP) regulations

- Specific guidelines for different types of toxicity studies (e.g., reproductive toxicity, chronic toxicity)

- Requirements for toxicology data submission in regulatory dossiers

Challenges and Future Directions

Despite significant advancements, toxicology in biomedical science faces several challenges:

- Translating animal data to humans

  - Humanized animal models

  - Advanced in vitro systems that better mimic human physiology

  - Integrative approaches combining multiple data sources

- Assessing multiple-component toxicity

  - Developing models to predict multiple-component toxicity

  - Studying additive, synergistic, and antagonistic interactions between compounds

  - Assessing cumulative exposure risks in environmental and occupational settings

- Addressing emerging contaminants

  - Develop specialized testing methods for novel substances

  - Understand long-term effects of chronic low-dose exposures

  - Assess potential environmental impacts of emerging contaminants

Understanding Data Integrity: The Backbone of Biomedical Research

What Is Data Integrity in Biomedical Research?

Data integrity in biomedical research refers to the accuracy, completeness, consistency, and reliability of data throughout its lifecycle. It encompasses the entire process of data collection, storage, analysis, and reporting. In essence, data integrity ensures the information used in research is trustworthy, traceable, and able to withstand scrutiny.

Core Principles of Data Integrity

The ALCOA+ framework, widely recognized within the scientific community, defines the fundamental principles of data integrity as follows:

- Attributable - All data should be traceable to its origin, including who created it and when.

- Legible - Data must be readable and permanently recorded.

- Contemporaneous - Data should be recorded at the time of generation.

- Original - Primary records should be preserved and any copies clearly identified.

- Accurate - Data should be free from errors and reflect actual observations.

- Complete - All data, including metadata, should be present.

- Consistent - Data should align across different sources and versions.

- Enduring - Data should be preserved in a lasting format.

- Available - Data should be readily accessible for review and audit.

The Importance of Data Integrity

- Ensuring research reliability and reproducibility

- Safeguarding patient safety

- Regulatory compliance

Challenges to Data Integrity in Biomedical Research

- Data volume and complexity

- Data heterogeneity

- Human error and bias

- Cybersecurity threats

Best Practices for Ensuring Data Integrity

- Implement robust data management systems

  - Audit trails to track all data modifications

  - Version control to manage changes over time

  - Access controls to prevent unauthorized data manipulation

  - Data validation checks to ensure accuracy and completeness

- Standardize data collection and documentation

  - Using validated instruments and methods

  - Implementing standardized data formats and terminologies

  - Maintaining detailed metadata to provide context for the data

- Conduct regular data quality assessments

  - Regular data audits to identify and correct errors

  - Statistical analyses to detect anomalies or outliers

  - Cross-validation of data from different sources

- Invest in training and education

  - Offering regular training sessions on data management and integrity

  - Fostering a culture of data quality and ethical research practices

  - Providing clear guidelines and standard operating procedures

- Leverage advanced technologies

  - Artificial intelligence and machine learning for automated data validation and anomaly detection

  - Blockchain technology for creating immutable data records

  - Natural language processing for extracting structured data from unstructured sources
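Audit trails and tamper-evident records, both mentioned above, can be illustrated with a small hash-chained log. This is a simplified sketch and not a blockchain or a validated system: each entry stores the hash of the previous one, so a later edit to any entry breaks verification. The user names, actions, and timestamps are invented.

```python
import hashlib
import json

FIELDS = ("user", "action", "timestamp", "prev")

def _digest(body):
    """SHA-256 over a canonical JSON rendering of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def append_entry(log, user, action, timestamp):
    """Append an audit entry whose hash covers its content and the previous hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    body = {"user": user, "action": action, "timestamp": timestamp, "prev": prev_hash}
    log.append({**body, "hash": _digest(body)})

def verify(log):
    """Recompute every hash; return False if any entry was altered or reordered."""
    prev_hash = "0" * 64
    for entry in log:
        body = {k: entry[k] for k in FIELDS}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True

log = []
append_entry(log, "analyst1", "created sample record S-001", "2024-01-05T09:00:00Z")
append_entry(log, "analyst2", "corrected dose value for S-001", "2024-01-05T10:30:00Z")
intact = verify(log)

log[0]["action"] = "created sample record S-002"  # simulated tampering
still_valid = verify(log)
```

Recording who did what and when also lines up with the Attributable and Contemporaneous principles of ALCOA+, though a production system would add access control and secure storage on top of the chaining shown here.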

The Role of Data Management Professionals in Ensuring Data Integrity

Firms that specialize in biomedical data services play a crucial role in helping pharmaceutical, biotech, and research organizations uphold the highest standards of data integrity. Look for a company with a comprehensive suite of services that includes:

- Data curation and standardization

- Quality control and validation

- Advanced analytics and data integration

- Custom data management solutions

Navigating the Data Jungle: How Ontology & Taxonomy Shape Biomedical Research

What Is Data Taxonomy?

Definition & Key Characteristics

Data taxonomy is a hierarchical classification system that organizes information into categories and subcategories based on shared characteristics. In the context of biomedical research, taxonomies provide a structured way to classify entities such as diseases, drugs, and biological processes. Key characteristics of taxonomies include:

- Hierarchical structure

- Clear parent-child relationships

- Comprehensive coverage of a domain

Applications in Biomedical Research

- Drug classification - Organizing pharmaceuticals based on their chemical structure, mechanism of action, or therapeutic use

- Disease classification - Categorizing diseases according to their etiology, affected body systems, or pathological features

- Biological classification - Organizing living organisms based on their evolutionary relationships and shared characteristics

Advantages

- Simplicity and ease of understanding

- Efficient navigation of large datasets

- Clear categorization for data retrieval

Limitations

- Rigidity in structure

- Difficulty in representing complex relationships

- Limited ability to capture cross-category connections

What Is Data Ontology?

Definition & Key Characteristics

Data ontology is a more complex and flexible approach to data organization. It represents a formal, explicit specification of a shared conceptualization within a domain. Unlike taxonomies, ontologies capture not only hierarchical relationships but also other types of associations between concepts. Key characteristics of ontologies include:

- Rich relationship types

- Formal logic and rules

- Machine-readable format

- Cross-domain integration capabilities

Applications in Biomedical Research

- Knowledge representation - Capturing complex biomedical concepts and their interrelationships

- Data integration - Facilitating the integration of diverse datasets from multiple sources

- Semantic reasoning - Enabling automated inference and discovery of new knowledge

- Natural language processing - Supporting the extraction of meaningful information from unstructured text

Advantages

- Ability to represent complex relationships

- Support for automated reasoning and inference

- Flexibility in accommodating new knowledge

- Enhanced interoperability between different data sources

Limitations

- Higher complexity and steeper learning curve

- Increased resource requirements for development and maintenance

- Potential for inconsistencies in large collaborative ontologies

Key Differences between Taxonomy & Ontology

Although both data ontology and taxonomy aim to organize data, they differ in structure, complexity, flexibility, and typical use cases. Determining whether you’re working with a taxonomy or an ontology can sometimes be challenging, as both systems organize information and some of the same tools may be used for viewing both.

| Aspect | Data Taxonomy | Data Ontology |
|---|---|---|
| Structure | Hierarchical tree-like structure with clear parent-child relationships | Relational, capturing complex interconnections between entities |
| Complexity | | |
| Flexibility | | |
| Use Case | | |
| Industry Example | | Understanding gene-disease-drug relationships in genomics research |
| Inference Capabilities | Limited to hierarchical inferences | Supports complex reasoning and automated inference |
| Interoperability | Generally domain-specific | Facilitates cross-domain integration and knowledge sharing |
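The structural difference can be seen in a small data-structure sketch. Here a taxonomy is stored as a child-to-parent map, which supports only "broader category" lookups, while an ontology is stored as typed subject-predicate-object triples that allow a simple inference across relation types. The terms and relation names are illustrative and not taken from any published vocabulary.

```python
# Taxonomy: each term has exactly one parent (illustrative terms).
taxonomy_parent = {
    "ibuprofen": "NSAID",
    "NSAID": "analgesic",
    "analgesic": "drug",
}

def ancestors(term, parent_map):
    """Walk up the hierarchy and return every broader category of term."""
    path = []
    while term in parent_map:
        term = parent_map[term]
        path.append(term)
    return path

# Ontology: typed relations stored as (subject, predicate, object) triples.
triples = {
    ("ibuprofen", "is_a", "NSAID"),
    ("ibuprofen", "inhibits", "PTGS2"),
    ("PTGS2", "participates_in", "prostaglandin synthesis"),
    ("prostaglandin synthesis", "associated_with", "inflammation"),
}

def objects(subject, predicate):
    """All objects linked to subject by the given relation type."""
    return {o for s, p, o in triples if s == subject and p == predicate}

def processes_affected(drug):
    """Simple inference: a drug affects the processes its targets participate in."""
    return {proc
            for target in objects(drug, "inhibits")
            for proc in objects(target, "participates_in")}

broader = ancestors("ibuprofen", taxonomy_parent)
affected = processes_affected("ibuprofen")
```

The taxonomy answers "what kind of thing is this?", while the triple store can also answer "what does this act on, and what follows from that?", which is the kind of reasoning the Inference Capabilities row refers to.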

Why These Differences Matter in Life Sciences & Biotech

In life sciences and biotech, data isn’t just increasing in volume but also in complexity. The ability to extract meaningful insights from data is critical for drug discovery, patient treatment, and regulatory compliance. Knowing when to use a taxonomy versus an ontology can greatly affect the quality and efficiency of data governance and analysis. For instance, taxonomies can help with organizing large datasets in clinical research, making it easier for teams to categorize patient data, drugs, and treatment outcomes. However, when the goal is to understand how a drug interacts with different biological pathways or to predict patient responses based on genetic profiles, ontologies become essential. By mapping complex relationships, ontologies provide the deep contextual understanding required to drive precision medicine and personalized treatments.

Choosing between Taxonomy & Ontology in Biomedical Research

The decision to use a taxonomy or ontology depends on several factors:

When to Use Taxonomy

- For simple hierarchical classification of well-defined entities

- When rapid development and deployment are priorities

- In scenarios where user-friendly navigation is crucial

- For projects with limited resources or expertise in ontology development

When to Use Ontology

- For representing complex biomedical knowledge with intricate relationships

- When integrating diverse datasets from multiple sources

- In projects requiring automated reasoning and knowledge discovery

- For long-term collaborative efforts in knowledge representation

Case Studies: Taxonomy & Ontology in Action

Case Study 1: Gene Ontology (GO)

The Gene Ontology (GO) project is a prominent example of ontology application in biomedical research. GO provides a comprehensive standardized vocabulary for describing gene and gene product attributes across species and databases. It consists of three interrelated ontologies:

- Molecular function

- Biological process

- Cellular component

Researchers use GO to:

- Annotate genes and gene products with standardized terms

- Perform enrichment analyses to identify overrepresented biological processes in gene sets

- Integrate and compare genomic data across different species and experiments

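Case Study 2: Anatomical Therapeutic Chemical (ATC) Classification System

The ATC classification system, maintained under the World Health Organization, is a widely used drug taxonomy. It classifies active substances at five hierarchical levels:

- Anatomical main group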

- Therapeutic subgroup

- Pharmacological subgroup

- Chemical subgroup

- Chemical substance

This hierarchical classification supports:

- Standardized drug classification across different countries and healthcare systems

- Efficient drug utilization studies and pharmacoepidemiological research

- Clear organization of pharmaceutical products for regulatory purposes
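The enrichment analysis mentioned in the Gene Ontology case study is commonly framed as a hypergeometric test: given how many genes in the background carry a term, how surprising is the number seen in a gene set of interest? The sketch below computes that upper-tail probability with the standard library. The gene counts are invented, and a real analysis would also correct for testing many terms at once.

```python
from math import comb

def enrichment_p_value(population, annotated, sample, hits):
    """Hypergeometric upper tail: P(X >= hits) when drawing `sample` genes
    from `population` genes, of which `annotated` carry the term."""
    upper = min(annotated, sample)
    total = comb(population, sample)
    tail = sum(
        comb(annotated, k) * comb(population - annotated, sample - k)
        for k in range(hits, upper + 1)
    )
    return tail / total

# Hypothetical counts: 20 background genes, 5 annotated with the term,
# a gene set of 4, of which 3 carry the term.
p = enrichment_p_value(population=20, annotated=5, sample=4, hits=3)
```

With these toy numbers the probability works out to 155/4845, or roughly 0.032. Exact integer arithmetic via `math.comb` avoids rounding problems for small counts, though large genomes are usually handled with a statistics library instead.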

The Future of Data Organization in Biomedical Research

As biomedical research continues to generate vast amounts of complex data, the importance of effective data organization tools will only grow. Future developments in this field may include:

- AI-driven ontology development - Leveraging artificial intelligence to assist in the creation and maintenance of large-scale biomedical ontologies

- Enhanced interoperability - Developing standards and tools to facilitate seamless integration between different ontologies and taxonomies

- Real-time knowledge graphs - Creating dynamic, self-updating knowledge representations that evolve with new scientific discoveries

- Personalized medicine applications - Utilizing ontologies to integrate diverse patient data for more precise diagnosis and treatment selection

Integration of Taxonomy & Ontology in Data Governance

While taxonomy and ontology serve different purposes, they’re not mutually exclusive. In fact, combining both approaches within a data governance framework can offer significant advantages. A well-defined taxonomy can provide the foundation for organizing data, while an ontology can overlay this structure with relationships and semantic connections, enabling more advanced data analysis and integration. Pharmaceutical and biotech companies increasingly rely on this integration to manage their vast data assets. For example, during drug development, taxonomies can organize preclinical and clinical data, while ontologies integrate this data with real-world evidence, such as electronic health records or genomic data, to identify new drug targets and predict adverse reactions.

In the complex world of biomedical research and pharmaceutical development, both taxonomies and ontologies play vital roles in organizing and leveraging scientific knowledge. While taxonomies offer simplicity and ease of use for straightforward classification tasks, ontologies provide the depth and flexibility needed to represent intricate biomedical concepts and relationships. By understanding the strengths and limitations of each approach, researchers and data scientists can make informed decisions about how to structure their data effectively. As the life sciences continue to advance, the thoughtful application of these data organization techniques will be crucial in unlocking new insights, accelerating drug discovery, and ultimately improving patient outcomes.

Are you ready to optimize your data organization strategy? Rancho Biosciences offers expert consultation and implementation services for both taxonomies and ontologies tailored to your specific research needs. Our team of experienced bioinformaticians and data scientists can help you navigate the complexities of biomedical data management, ensuring you leverage the most appropriate tools for your projects.

Contact Rancho Biosciences today to schedule a consultation and discover how we can enhance your data organization capabilities, streamline your research processes, and accelerate your path to innovation, discovery, and scientific breakthroughs. Our bioinformatics services and expertise can propel your projects to new heights. Don’t miss the opportunity to take your data-driven endeavors to the next level.

Unlocking the Secrets of Life: A Deep Dive into Single-Cell Bioinformatics

Single-Cell Bioinformatics Defined

At its core, single-cell bioinformatics is a multidisciplinary field that combines biology, genomics, and computational analysis to investigate the molecular profiles of individual cells. Unlike conventional approaches that analyze a population of cells together, single-cell bioinformatics allows researchers to scrutinize the unique characteristics of each cell, offering unprecedented insights into cellular diversity, function, and behavior.

The Power of Single-Cell Analysis

Unraveling Cellular Heterogeneity

One of the key advantages of single-cell bioinformatics is its ability to unveil the intricacies of cellular heterogeneity. In a population of seemingly identical cells, there can be subtle yet crucial differences at the molecular level. Single-cell analysis enables scientists to identify and characterize these variations, providing a more accurate representation of the true biological landscape.

Mapping Cellular Trajectories

Single-cell bioinformatics goes beyond static snapshots of cells, allowing researchers to track and understand dynamic processes such as cell differentiation and development. By analyzing gene expression patterns over time, scientists can construct cellular trajectories, revealing the intricate paths cells take as they evolve and specialize.

The Workflow of Single-Cell Bioinformatics

Cell Isolation & Preparation

The journey begins with the isolation of individual cells from a tissue or sample. Various techniques, including fluorescence-activated cell sorting (FACS) and microfluidics, are employed to isolate single cells while maintaining their viability. Once isolated, the cells undergo meticulous preparation to extract RNA, DNA, or proteins for downstream analysis.

High-Throughput Sequencing

The extracted genetic material is subjected to high-throughput sequencing, generating vast amounts of data. This step is crucial for capturing the molecular profile of each cell accurately. Advances in sequencing technologies, such as single-cell RNA sequencing (scRNA-seq) and single-cell DNA sequencing (scDNA-seq), have played a pivotal role in the success of single-cell bioinformatics.

Computational Analysis

The real power of single-cell bioinformatics lies in its computational prowess. Analyzing the massive datasets generated during sequencing requires sophisticated algorithms and bioinformatics tools. Researchers employ various techniques, including dimensionality reduction, clustering, and trajectory inference, to make sense of the complex molecular landscapes revealed by single-cell data.

Applications Across Biology & Medicine

Advancing Cancer Research

Single-cell bioinformatics has revolutionized cancer research by providing a detailed understanding of tumor heterogeneity. This knowledge is crucial for developing targeted therapies tailored to the specific molecular profiles of individual cancer cells, ultimately improving treatment outcomes.

Neuroscience Breakthroughs

In neuroscience, single-cell analysis has shed light on the complexity of the brain, unraveling the diversity of cell types and their functions. This knowledge is instrumental in deciphering neurological disorders and developing precise interventions.

Precision Medicine & Therapeutics

The ability to analyze individual cells has immense implications for precision medicine. By considering the unique molecular characteristics of each patient’s cells, researchers can tailor treatments to maximize efficacy and minimize side effects.

Challenges & Future Directions

While single-cell bioinformatics holds immense promise, it’s not without challenges. Technical complexities, cost considerations, and the need for standardized protocols are among the hurdles researchers face. However, ongoing advancements in technology and methodology are gradually overcoming these obstacles, making single-cell analysis more accessible and robust.

Looking ahead, the future of single-cell bioinformatics holds exciting possibilities. Integrating multi-omics data, improving single-cell spatial profiling techniques, and enhancing computational tools will further elevate the precision and depth of our understanding of cellular biology.

As we navigate the frontiers of biological research, single-cell bioinformatics stands out as a transformative force, unlocking the secrets encoded within the microscopic realms of individual cells. From personalized medicine to unraveling the complexities of diseases, the applications of single-cell analysis are vast and promising. As technology advances and researchers continue to refine their methods, the insights gained from single-cell bioinformatics will undoubtedly shape the future of biology and medicine, offering a clearer and more detailed picture of life at the cellular level.

If you’re looking for a reliable and experienced partner to help you with your data science projects, look no further than Rancho BioSciences. We’re a global leader in data curation, analysis, and visualization for life sciences and healthcare. Our team of experts can handle any type of data, from NGS data analysis to genomics and clinical trials, and deliver high-quality results in a timely and cost-effective manner. Whether you need to clean, annotate, integrate, visualize, or interpret your data, Rancho BioSciences can provide you with customized solutions that meet your specific needs and goals. Contact us today to find out how we can help you with your data science challenges.

Single-Cell Sequencing: Unlocking the Secrets of Cellular Heterogeneity in Biomedical Research

| Miscellaneous

Understanding Single-Cell Sequencing

Before exploring specific examples, it’s crucial to grasp the fundamental concept of single-cell sequencing. This advanced technique allows researchers to analyze the genetic material of individual cells, providing a high-resolution view of cellular diversity within a sample. Unlike traditional bulk sequencing methods, whose output is an average across many cells, single-cell RNA sequencing captures the transcriptional profile of each individual cell, offering invaluable insights into cellular function, developmental trajectories, and disease mechanisms.

A Paradigm-Shifting Example: Single-Cell RNA Sequencing in Cancer Research

One of the most impactful applications of single-cell sequencing lies in the field of oncology. Let’s explore a groundbreaking example that demonstrates the power of this technology in revolutionizing cancer research and treatment strategies.

The Study: Unraveling Intratumor Heterogeneity

In a landmark study published in Cell, researchers employed single-cell RNA sequencing (scRNA-seq) to investigate intratumor heterogeneity in glioblastoma, an aggressive form of brain cancer. This publication exemplifies how single-cell sequencing can provide crucial insights into tumor composition and evolution, with far-reaching implications for targeted therapies and personalized medicine.

Methodology & Findings

The research team collected tumor samples from 13 patients diagnosed with glioblastoma. Using the 10x Genomics Chromium platform, they performed scRNA-seq on over 24,000 individual cells isolated from these tumors. This high-throughput approach allowed for an unprecedented level of resolution in analyzing the transcriptomes of cancer cells and the tumor microenvironment (TME). Key findings from this study include:

- Cellular diversity - The researchers identified multiple distinct cell populations within each tumor, including malignant cells, immune cells, and stromal cells. This heterogeneity was previously undetectable using bulk sequencing methods.

- Transcriptional programs - By analyzing gene expression patterns at the single-cell level, the team uncovered specific transcriptional programs associated with different cellular states, including proliferation, invasion, and stemness.

- Tumor evolution - The study revealed insights into the evolutionary trajectories of glioblastoma cells, identifying potential mechanisms of treatment resistance and tumor recurrence.

- Immune microenvironment - Single-cell sequencing allowed for a detailed characterization of the tumor immune microenvironment, providing valuable information for developing immunotherapy strategies.
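The contrast with bulk sequencing in the first finding can be illustrated with a toy calculation. The sketch below uses invented expression values for one hypothetical marker gene in six cells (none of these numbers come from the study) and shows how a bulk-style average hides two populations that the per-cell values make obvious. The cell names, the `bulk_average` and `split_by_threshold` helpers, and the 1.0 cutoff are all assumptions chosen for illustration.

```python
from statistics import mean

# Hypothetical per-cell expression of a single marker gene (arbitrary units).
# These values are invented for illustration, not taken from any study.
cell_expression = {
    "cell_1": 0.0,
    "cell_2": 0.1,
    "cell_3": 0.0,
    "cell_4": 9.8,
    "cell_5": 10.1,
    "cell_6": 10.0,
}


def bulk_average(expression):
    """Mimic a bulk measurement: a single averaged value for the whole sample."""
    return mean(expression.values())


def split_by_threshold(expression, threshold=1.0):
    """Separate cells into low and high expressers using an assumed cutoff."""
    low = sorted(cell for cell, value in expression.items() if value < threshold)
    high = sorted(cell for cell, value in expression.items() if value >= threshold)
    return low, high


bulk = bulk_average(cell_expression)
low_cells, high_cells = split_by_threshold(cell_expression)

print(f"bulk-style average: {bulk:.2f}")
print(f"low expressers:  {low_cells}")
print(f"high expressers: {high_cells}")
```

The averaged value sits midway between the two groups and describes no actual cell in the sample, which is the sense in which bulk measurements can miss heterogeneity. Real single-cell analyses work across thousands of genes and use clustering rather than a single hand-picked threshold.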

Implications for Drug Discovery & Personalized Medicine

This example of single-cell sequencing in glioblastoma research demonstrates the transformative potential of this technology for the pharmaceutical and biotech industries:

- Target identification - By uncovering specific cellular subpopulations and the associated molecular signatures that set them apart, single-cell sequencing enables the identification of novel therapeutic targets for drug development.

- Precision medicine - The ability to characterize individual tumors at the cellular level supports the development of personalized treatment strategies tailored to each patient’s unique tumor composition.

- Drug resistance mechanisms - Single-cell analysis provides insights into the mechanisms of drug resistance, allowing researchers to design more effective combination therapies and overcome treatment challenges.

- Biomarker discovery - The high-resolution data obtained from single-cell sequencing facilitates the identification of biomarkers for early disease detection, treatment response prediction, and patient stratification.

Expanding Horizons: Single-Cell Sequencing across Biomedical Research

While the glioblastoma study serves as a powerful example, the applications of single-cell sequencing extend far beyond cancer research. Let’s explore how this technology is transforming other areas of biomedical research and drug development.

Immunology & Autoimmune Disorders

Single-cell sequencing has revolutionized our understanding of the immune system’s complexity. For instance, one study published in the journal Nature Immunology used scRNA-seq analysis to characterize the heterogeneity of immune cells in rheumatoid arthritis patients, revealing novel cellular subtypes and potential therapeutic targets. This approach is enabling the development of more targeted immunotherapies and personalized treatments for autoimmune disorders.

Neurodegenerative Diseases

In the field of neuroscience, single-cell sequencing is unraveling the intricate cellular landscape of the brain. Researchers have used this technology to create comprehensive atlases of brain cell types and identify specific neuronal populations affected in diseases like Alzheimer’s and Parkinson’s. These insights are crucial for developing targeted therapies and early diagnostic tools for neurodegenerative disorders.

Developmental Biology & Regenerative Medicine

Single-cell sequencing is providing unprecedented insights into embryonic development and cellular differentiation. For example, a study published in Nature used scRNA-seq to map the entire process of embryonic organ development in mice. This knowledge is invaluable for advancing regenerative medicine approaches and developing stem cell-based therapies.

Infectious Diseases

The COVID-19 pandemic has highlighted the importance of single-cell sequencing in understanding host–pathogen interactions. Researchers have used this technology to characterize the immune response to SARS-CoV-2 infection at the cellular level, informing vaccine development and identifying potential therapeutic targets.

Challenges & Future Directions

While single-cell sequencing has undoubtedly transformed biomedical research, several challenges and opportunities remain:

- Data analysis and integration - The sheer volume and ever-increasing complexity of single-cell sequencing datasets require sophisticated bioinformatics tools and analytical approaches. Developing robust algorithms for data integration and interpretation is crucial for maximizing the impact of this technology.

- Spatial context - Traditional single-cell sequencing methods lose information about the spatial organization of cells within tissues. Emerging spatial transcriptomics technologies aim to address this limitation, providing both transcriptional and spatial information.

- Multi-omics integration - Combining single-cell sequencing with other omics technologies, such as proteomics and epigenomics, will provide a more comprehensive understanding of cellular function and disease mechanisms.

- Clinical translation - While single-cell sequencing has shown immense potential in research settings, translating these insights into clinical applications remains a challenge. Developing standardized protocols and streamlined workflows for clinical implementation is essential for realizing the full potential of this technology in precision medicine.

Unraveling the DNA of Data: A Comprehensive Guide to Bioinformatics

| Miscellaneous

Defining Bioinformatics

At its core, bioinformatics is the application of computational tools and methods to collect, store, analyze, and disseminate biological data and information. It emerged as a distinct field in the 1970s but gained significant momentum with the advent of high-throughput sequencing technologies and the exponential growth of biological data in the 1990s and 2000s.

Bioinformatics serves as a bridge between the vast amounts of raw biological data generated by modern laboratory techniques and the meaningful insights that can be derived from this data. It encompasses a wide range of activities, from the development of algorithms for analyzing DNA sequences to efficiently storing and retrieving complex biological information in databases.

The Fundamental Components of Bioinformatics

Biological Data

The foundation of bioinformatics is biological data. This includes:

- Genomic sequences (DNA and RNA)

- Protein sequences and structures

- Gene expression data (RNA)

- Metabolomic data

- Phylogenetic information

Computational Tools

- Sequence alignment algorithms (e.g., BLAST, Clustal)

- Gene prediction tools

- Protein structure prediction software

- Phylogenetic tree construction methods

- Variant calling methodologies/tools (e.g., Mutect2)

- Expression and transcriptomic analysis (e.g., DESeq2, edgeR, CellRanger, salmon)

- Machine learning and data mining techniques

Databases

- GenBank for nucleotide sequences

- UniProt for protein sequences and functional information

- PDB (Protein Data Bank) for 3D structures of proteins and nucleic acids

- KEGG for metabolic pathways
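Sequence alignment, named in the list above alongside BLAST and Clustal, rests on dynamic programming in its textbook form. The following is a minimal sketch of global alignment scoring in the style of Needleman-Wunsch. It is not how BLAST or Clustal work internally (BLAST is a heuristic local search and Clustal builds multiple alignments); it only illustrates the underlying idea. The function name and the scoring values (match +1, mismatch -1, gap -1) are assumptions made for this example.

```python
def global_alignment_score(seq_a, seq_b, match=1, mismatch=-1, gap=-1):
    """Return the best global alignment score for two sequences.

    Uses a Needleman-Wunsch style dynamic programming table with a
    simple linear gap penalty (assumed scoring, for illustration only).
    """
    rows, cols = len(seq_a) + 1, len(seq_b) + 1
    score = [[0] * cols for _ in range(rows)]

    # Aligning a prefix against nothing costs one gap per character.
    for i in range(1, rows):
        score[i][0] = i * gap
    for j in range(1, cols):
        score[0][j] = j * gap

    for i in range(1, rows):
        for j in range(1, cols):
            same = seq_a[i - 1] == seq_b[j - 1]
            diagonal = score[i - 1][j - 1] + (match if same else mismatch)
            gap_in_b = score[i - 1][j] + gap  # character of seq_a aligned to a gap
            gap_in_a = score[i][j - 1] + gap  # character of seq_b aligned to a gap
            score[i][j] = max(diagonal, gap_in_b, gap_in_a)

    return score[-1][-1]


print(global_alignment_score("GATTACA", "GATTACA"))  # prints 7
print(global_alignment_score("GATTACA", "GATACA"))   # prints 5
```

In the second call, six bases match and one gap is needed to account for the missing T, giving 6 - 1 = 5. Production tools add substitution matrices, affine gap penalties, and heuristics to scale to large databases.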

Key Applications of Bioinformatics

Transcriptomics, Genomics, & Proteomics

The quantification and characterization of all aspects of the “central dogma” of biology (DNA->RNA->protein) is a critical component of bioinformatics analysis. Bioinformatics tools are essential for:

- Assembling and annotating genomes

- Identifying genes and predicting their functions

- Comparing genomes across species

- Comparing genomic variants across populations and individual samples

- Analyzing protein sequences and structures

- Predicting protein-protein interactions

- Quantifying the abundance of individual transcripts and/or proteins in a single sample or cell

- Constructing phylogenetic trees

- Identifying conserved sequences across species

- Studying the evolution of genes and genomes

- Analyzing population genetics data

- Identifying potential drug targets and biomarkers

- Predicting drug-protein interactions

- Analyzing the results of high-throughput screening

- Designing personalized medicine approaches

- Analyzing individual genomes to identify disease risk factors

- Predicting drug responses based on genetic markers and other biomarkers

- Designing targeted therapies for cancer and other diseases

- Integrating diverse data types (genomic, proteomic, clinical) for comprehensive patient profiles
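Of the tasks listed above, transcript quantification lends itself to a short illustration. Raw read counts cannot be compared directly between samples sequenced to different depths, so a common first step is to rescale them. The sketch below computes counts per million (CPM), one simple normalization; the gene names, counts, and the `counts_per_million` helper are hypothetical, and dedicated expression analysis packages apply more elaborate normalization and statistical models than this.

```python
def counts_per_million(raw_counts):
    """Scale raw read counts so that each sample sums to one million."""
    total = sum(raw_counts.values())
    if total == 0:
        raise ValueError("sample has no counted reads")
    return {gene: count / total * 1_000_000 for gene, count in raw_counts.items()}


# Hypothetical raw counts for two samples; sample_b was sequenced 10x deeper.
sample_a = {"GENE_A": 500, "GENE_B": 1_500, "GENE_C": 8_000}
sample_b = {"GENE_A": 5_000, "GENE_B": 15_000, "GENE_C": 80_000}

cpm_a = counts_per_million(sample_a)
cpm_b = counts_per_million(sample_b)

for gene in sample_a:
    print(f"{gene}: raw {sample_a[gene]} vs {sample_b[gene]}, "
          f"CPM {cpm_a[gene]:.0f} vs {cpm_b[gene]:.0f}")
```

The raw counts differ tenfold between the two samples, yet the CPM values agree, showing that the apparent difference came from sequencing depth rather than from expression.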

Challenges in Bioinformatics